Here’s our second post in the RDO + Hyper-V series targeting Havana and automation.

We had lots of great feedback on the first post, along with many requests about how to troubleshoot the network connectivity issues that arise quite often when you configure a complex environment like a multi-node OpenStack deployment, especially for the first time!

Although RDO is a great solution to automate an OpenStack deployment, it still requires quite a few manual steps, especially in a multi-node scenario. Any small configuration error in one of those steps can lead to hours of troubleshooting, so we definitely need an easy, foolproof solution.

In this post we’ll detail a “simple” script available here that will perform all the configuration work for you.

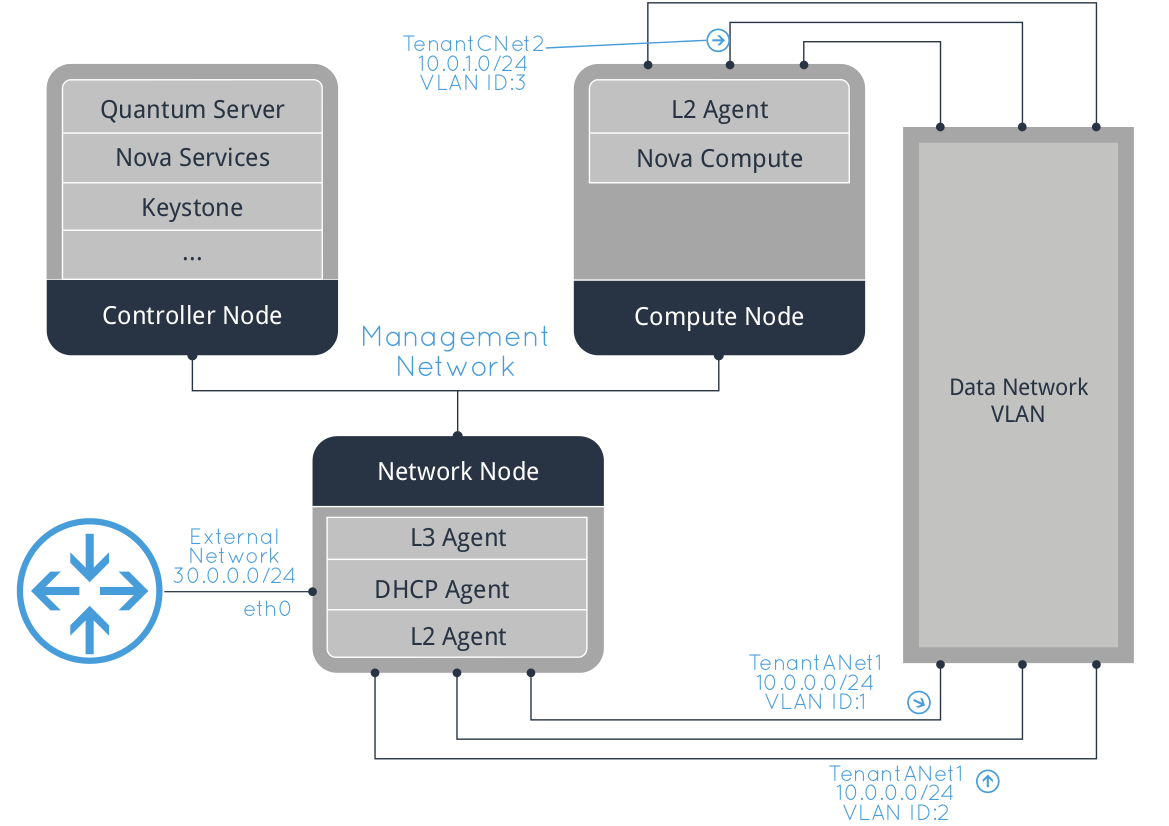

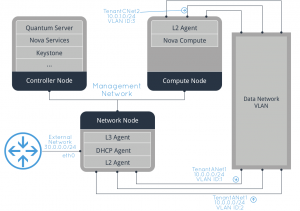

Here’s a diagram of what we are going to obtain:

![OpenStack_multi_node_network]()

Physical network layout

Network connectivity is very important, so let’s recap how the hosts need to be connected.

We’ll use 3 separate networks:

1. Management

This network will be used only for setting up the hosts and for HTTP and AMQP communication among them. It requires Internet access during the initial configuration. Guests must not be able to access this network under any circumstance.

2. Data (guest access)

Network used by the guests to communicate with each other (in the same Neutron network) and with the Network host for external routing.

Since we are going to use VLANs for network isolation in this example, make sure that your switches are properly configured (e.g. trunking mode).

3. External

Used by the Network host to communicate with external networks (e.g. Internet).

Hosts OS configuration

Now we’ll need to get the hosts running. If you want to create a proof of concept (PoC), virtual machines are perfectly adequate as long as they support nested virtualization, while for a real production environment we suggest using physical machines for all the nodes except, optionally, the controller. In this example we’ll use the “root” / “Passw0rd” credentials on all Linux hosts and “Administrator” / “Passw0rd” on Hyper-V.

Controller host

RAM

At least 1GB; 2-8GB or more if you plan to use Ceilometer on this node.

HDD

At least 10GB; at least 100GB is recommended if you plan to store the Glance images here.

CPU

1-2 sockets, not particularly relevant in most circumstances.

Network

- eth0 connected to the Management network.

Network host

RAM

1GB

HDD

10GB or even less. This host has no particular disk space requirements.

CPU

1-2 sockets, not particularly relevant unless you use GRE / VXLAN tunnelling.

Network

- eth0 connected to the Management network.

- eth1 connected to the Data network.

- eth2 connected to the External network.

KVM compute host (optional)

Multiple compute nodes can be added based on your requirements. We’ll use one in our example for the sake of simplicity.

RAM

A minimum of 4GB. Recommended? As much as possible. :-) This really depends on how many VMs you want to run. Sum the amount of memory you want to assign to the guests and add 1 GB for the host. Avoid memory overcommit if possible. 64, 128, 192 GB are quite common choices.

HDD

A minimum of 10GB. The actual size depends on the local storage that you want to assign to the guests; I’d start with at least 100GB.

CPU

2 sockets, as many cores as possible, based on the number of guests. For example, 2 hexa-core sockets provide 12 physical cores (24 hardware threads with multithreading enabled) to assign to the guests.

Network

- eth0 adapter connected to the Management network.

- eth1 adapter connected to the Data network.

Hyper-V compute host (optional)

The same considerations discussed for the KVM compute node apply to this case as well.

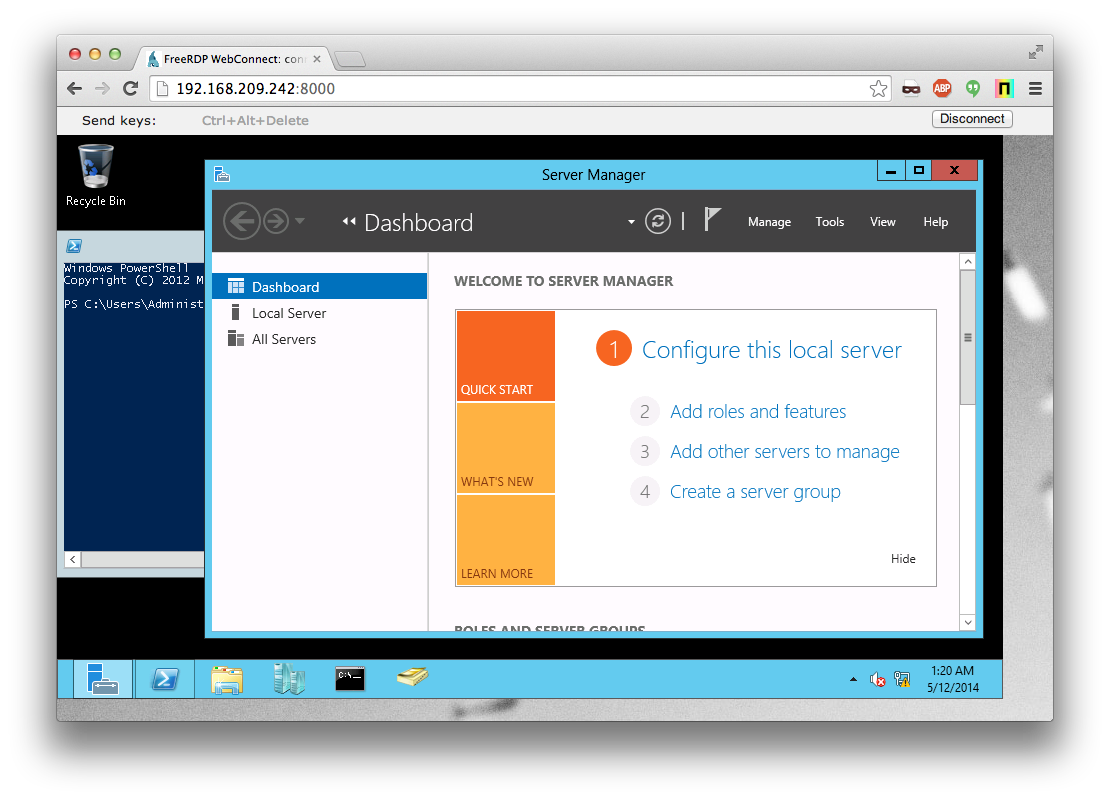

You can just perform a basic installation and, at the end, enable WinRM by running the following PowerShell script (SetupWinRMAccess.ps1). It downloads OpenSSL, configures a CA, generates a certificate to be used for HTTPS and configures WinRM for HTTPS usage with basic authentication:

$filepath = "$ENV:Temp\SetupWinRMAccess.ps1"

(new-object System.Net.WebClient).DownloadFile("https://raw.github.com/cloudbase/openstack-rdo-scripts/master/SetupWinRMAccess.ps1", $filepath)

Set-ExecutionPolicy RemoteSigned -Force

& $filepath

del $filepath

Configure RDO

Now, let’s get the management IPs assigned to the hosts. In our examples we’ll use:

- Controller: 192.168.200.1

- Network: 192.168.200.2

- KVM compute: 192.168.200.3

- Hyper-V compute: 192.168.200.4

You can already start the script and let it run while we explain how it works afterwards. It runs on Linux, Mac OS X or Windows (using Bash).

git clone https://github.com/cloudbase/openstack-rdo-scripts

cd openstack-rdo-scripts

ssh-keygen -q -t rsa -f ~/.ssh/id_rsa_rdo -N "" -b 4096

./configure-rdo-multi-node.sh havana ~/.ssh/id_rsa_rdo rdo-controller 192.168.200.1 rdo-network \
192.168.200.2 rdo-kvm 192.168.200.3 rdo-hyperv 192.168.200.4

If you are not deploying Hyper-V, you can simply omit the last two values of course.
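To make the optional arguments concrete, here's a minimal sketch of how the positional values shown above could be mapped to named variables. It is not the actual parsing code from configure-rdo-multi-node.sh: the function name `parse_rdo_args` is hypothetical, and the variable names are simply the ones that appear in the snippets throughout this post.

```bash
#!/usr/bin/env bash

# Hypothetical helper (an assumption, not taken from the real script):
# maps the positional arguments to named variables. The Hyper-V host
# name and IP are optional and default to empty strings.
parse_rdo_args() {
    if [ "$#" -ne 8 ] && [ "$#" -ne 10 ]; then
        echo "expected 8 arguments, or 10 when deploying Hyper-V" >&2
        return 1
    fi
    OPENSTACK_RELEASE="$1"
    SSH_KEY_FILE="$2"
    CONTROLLER_VM_NAME="$3"
    CONTROLLER_VM_IP="$4"
    NETWORK_VM_NAME="$5"
    NETWORK_VM_IP="$6"
    QEMU_COMPUTE_VM_NAME="$7"
    QEMU_COMPUTE_VM_IP="$8"
    HYPERV_COMPUTE_VM_NAME="${9:-}"
    HYPERV_COMPUTE_VM_IP="${10:-}"
}

# Example without Hyper-V: the last two values are simply left out.
parse_rdo_args havana ~/.ssh/id_rsa_rdo rdo-controller 192.168.200.1 \
    rdo-network 192.168.200.2 rdo-kvm 192.168.200.3
if [ -z "$HYPERV_COMPUTE_VM_IP" ]; then
    echo "No Hyper-V host given, skipping the Hyper-V steps"
fi
```

An empty `HYPERV_COMPUTE_VM_IP` is then all that later steps need to test in order to skip the Hyper-V specific work.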

Here’s a video showing what to expect (except the initial VM deployment part which is outside of the scope of this post):

The script explained

You can open it directly on GitHub and follow the next explanations:

1. Inject the SSH key

This is performed for each Linux host to enable the automated configuration steps afterwards.

configure_ssh_pubkey_auth $RDO_ADMIN $CONTROLLER_VM_IP $SSH_KEY_FILE_PUB $RDO_ADMIN_PASSWORD

configure_ssh_pubkey_auth $RDO_ADMIN $NETWORK_VM_IP $SSH_KEY_FILE_PUB $RDO_ADMIN_PASSWORD

configure_ssh_pubkey_auth $RDO_ADMIN $QEMU_COMPUTE_VM_IP $SSH_KEY_FILE_PUB $RDO_ADMIN_PASSWORD
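To give an idea of what the key injection has to achieve on each host, here's a minimal local sketch. It only shows the idempotent append to an authorized_keys file; the real `configure_ssh_pubkey_auth` does its work over SSH using the password, which is not reproduced here. The function name `add_authorized_key` is hypothetical.

```bash
#!/usr/bin/env bash

# Hypothetical local helper (an assumption, not the script's own code):
# appends a public key to an authorized_keys file only if that exact line
# is not already there, so running it twice is harmless.
add_authorized_key() {
    local pubkey_file="$1"
    local auth_keys_file="$2"
    local key
    key=$(cat "$pubkey_file") || return 1

    mkdir -p "$(dirname "$auth_keys_file")"
    touch "$auth_keys_file"
    # sshd refuses key files that are writable by other users
    chmod 600 "$auth_keys_file"

    # -x matches the whole line, -F treats the key as a fixed string
    if ! grep -qxF -- "$key" "$auth_keys_file"; then
        printf '%s\n' "$key" >> "$auth_keys_file"
    fi
}
```

On a host this would be called as `add_authorized_key ~/.ssh/id_rsa_rdo.pub ~/.ssh/authorized_keys`. Keeping the step idempotent matters because the whole configuration script may be run more than once.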

2. Set the host date and time

update_host_date $RDO_ADMIN@$CONTROLLER_VM_IP

update_host_date $RDO_ADMIN@$NETWORK_VM_IP

update_host_date $RDO_ADMIN@$QEMU_COMPUTE_VM_IP

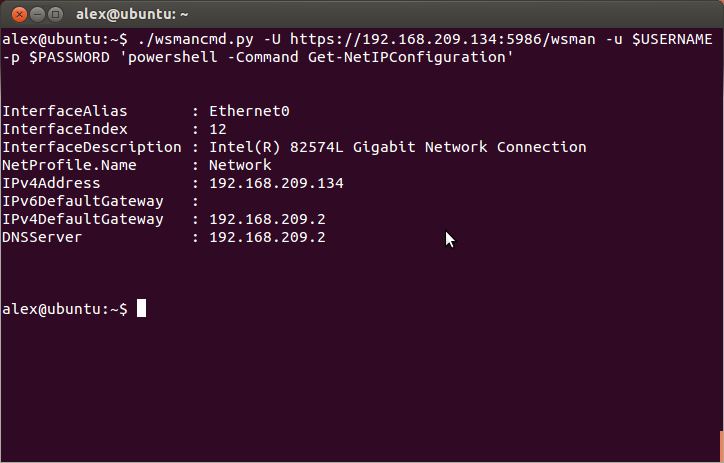

3. Rename the Hyper-V compute host (if present)

exec_with_retry "$BASEDIR/rename-windows-host.sh $HYPERV_COMPUTE_VM_IP $HYPERV_ADMIN \

$HYPERV_PASSWORD $HYPERV_COMPUTE_VM_NAME" 30 30

This uses a separate script which in turn is based on WSMan to call PowerShell remotely (even from Linux!):

wsmancmd.py -U https://$HOST:5986/wsman -u $USERNAME -p $PASSWORD \

'powershell -NonInteractive -Command "if ([System.Net.Dns]::GetHostName() -ne \"'$NEW_HOST_NAME'\") \

{ Rename-Computer \"'$NEW_HOST_NAME'\" -Restart -Force }"'

4. Configure Linux networking on all nodes

This uses a function called config_openstack_network_adapter to configure the adapter’s ifcfg-ethx file to be used by Open vSwitch.

# Controller

set_hostname $RDO_ADMIN@$CONTROLLER_VM_IP $CONTROLLER_VM_NAME.$DOMAIN $CONTROLLER_VM_IP

# Network

config_openstack_network_adapter $RDO_ADMIN@$NETWORK_VM_IP eth1

config_openstack_network_adapter $RDO_ADMIN@$NETWORK_VM_IP eth2

set_hostname $RDO_ADMIN@$NETWORK_VM_IP $NETWORK_VM_NAME.$DOMAIN $NETWORK_VM_IP

# KVM compute

config_openstack_network_adapter $RDO_ADMIN@$QEMU_COMPUTE_VM_IP eth1

set_hostname $RDO_ADMIN@$QEMU_COMPUTE_VM_IP $QEMU_COMPUTE_VM_NAME.$DOMAIN $QEMU_COMPUTE_VM_IP
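The exact file written by config_openstack_network_adapter is not shown in this post, so here's a hedged sketch of what an ifcfg file for such an adapter typically looks like on RHEL / CentOS 6: the interface comes up at boot with no IP address, since it will be attached to an Open vSwitch bridge. The function name `render_ifcfg` and the chosen keys are assumptions.

```bash
#!/usr/bin/env bash

# Hypothetical generator (keys are typical RHEL 6 network-scripts settings,
# not copied from the real function): prints an ifcfg file for an adapter
# that is enabled at boot without any IP configuration.
render_ifcfg() {
    local adapter="$1"
    cat <<EOF
DEVICE=$adapter
BOOTPROTO=none
ONBOOT=yes
NM_CONTROLLED=no
EOF
}

# Preview the file for the data network adapter.
render_ifcfg eth1
```

On a real host the output would go to /etc/sysconfig/network-scripts/ifcfg-eth1, followed by `ifup eth1`.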

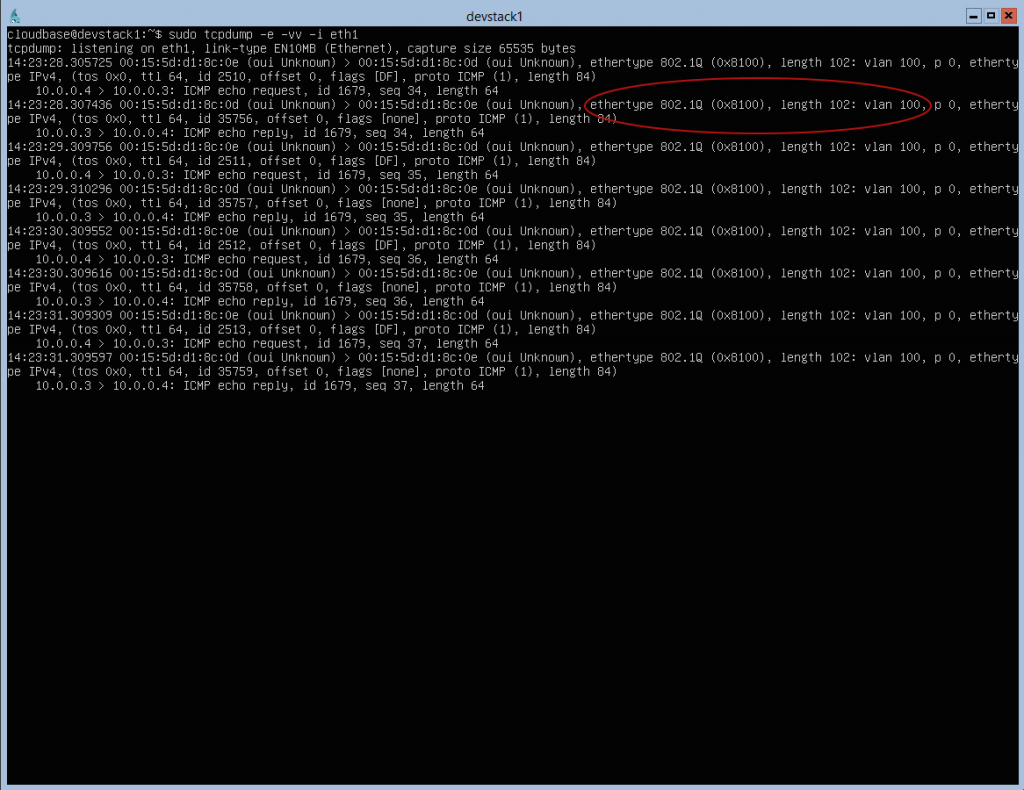

5. Test if networking works

This is a very important step, as it validates the network configuration and catches potential issues early!

Some helper functions are used in this case as well.

set_test_network_config $RDO_ADMIN@$NETWORK_VM_IP $NETWORK_VM_TEST_IP/24 add

set_test_network_config $RDO_ADMIN@$QEMU_COMPUTE_VM_IP $QEMU_COMPUTE_VM_TEST_IP/24 add

ping_ip $RDO_ADMIN@$NETWORK_VM_IP $QEMU_COMPUTE_VM_TEST_IP

ping_ip $RDO_ADMIN@$QEMU_COMPUTE_VM_IP $NETWORK_VM_TEST_IP
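The idea behind the test is simple: give each host a temporary address on the data network, ping across, and remove the address afterwards. Here's a minimal sketch of the commands such helpers could run on the remote hosts. The function names, the default adapter (eth1, the data network adapter in this setup) and the ping options are assumptions, not the script's actual implementation.

```bash
#!/usr/bin/env bash

# Hypothetical builder: prints the command that adds or removes a temporary
# test address on the data network adapter (eth1 unless told otherwise).
test_net_cmd() {
    local cidr="$1"
    local action="$2"            # "add" or "del"
    local adapter="${3:-eth1}"
    case "$action" in
        add|del) echo "ip addr $action $cidr dev $adapter" ;;
        *) echo "unknown action: $action" >&2; return 1 ;;
    esac
}

# Hypothetical builder: prints a ping command that sends three packets and
# waits at most two seconds for each reply.
ping_cmd() {
    echo "ping -c 3 -W 2 $1"
}
```

Each command string would then be executed over SSH, for example `ssh root@192.168.200.2 "$(test_net_cmd 10.13.8.2/24 add)"`, where 10.13.8.2/24 is just an illustrative test address.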

6. Install Packstack on the controller

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "yum install -y http://rdo.fedorapeople.org/openstack/openstack-$OPENSTACK_RELEASE/rdo-release-$OPENSTACK_RELEASE.rpm || true"

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "yum install -y openstack-packstack"

We’re also adding crudini to manage the OpenStack configuration files.

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "yum -y install http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm || true"

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "yum install -y crudini"

7. Generate a Packstack configuration file

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "packstack --gen-answer-file=$ANSWERS_FILE"

8. Configure the Packstack answer file

Here’s the part relevant to Havana.

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "\

crudini --set $ANSWERS_FILE general CONFIG_SSH_KEY /root/.ssh/id_rsa.pub && \

crudini --set $ANSWERS_FILE general CONFIG_NTP_SERVERS 0.pool.ntp.org,1.pool.ntp.org,2.pool.ntp.org,3.pool.ntp.org && \

crudini --set $ANSWERS_FILE general CONFIG_CINDER_VOLUMES_SIZE 20G && \

crudini --set $ANSWERS_FILE general CONFIG_NOVA_COMPUTE_HOSTS $QEMU_COMPUTE_VM_IP && \

crudini --del $ANSWERS_FILE general CONFIG_NOVA_NETWORK_HOST"

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "\

crudini --set $ANSWERS_FILE general CONFIG_NEUTRON_L3_HOSTS $NETWORK_VM_IP && \

crudini --set $ANSWERS_FILE general CONFIG_NEUTRON_DHCP_HOSTS $NETWORK_VM_IP && \

crudini --set $ANSWERS_FILE general CONFIG_NEUTRON_METADATA_HOSTS $NETWORK_VM_IP && \

crudini --set $ANSWERS_FILE general CONFIG_NEUTRON_OVS_TENANT_NETWORK_TYPE vlan && \

crudini --set $ANSWERS_FILE general CONFIG_NEUTRON_OVS_VLAN_RANGES physnet1:1000:2000 && \

crudini --set $ANSWERS_FILE general CONFIG_NEUTRON_OVS_BRIDGE_MAPPINGS physnet1:br-eth1 && \

crudini --set $ANSWERS_FILE general CONFIG_NEUTRON_OVS_BRIDGE_IFACES br-eth1:eth1"
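Long chains of crudini calls are easy to get wrong by hand, so here's a small sketch of how such a chain could be generated from KEY=VALUE pairs instead. The helper name `build_crudini_cmd` is hypothetical and is not part of the script; only the `crudini --set FILE SECTION KEY VALUE` form comes from the commands above.

```bash
#!/usr/bin/env bash

# Hypothetical helper: turns KEY=VALUE pairs into a single chain of
# "crudini --set" commands joined with &&, so that the whole batch can be
# sent to the controller in one SSH call.
build_crudini_cmd() {
    local file="$1"
    local section="$2"
    shift 2
    local cmd=""
    local kv key value
    for kv in "$@"; do
        # Split on the first "=" only, so values may contain "=" themselves
        key="${kv%%=*}"
        value="${kv#*=}"
        cmd="${cmd:+$cmd && }crudini --set $file $section $key $value"
    done
    echo "$cmd"
}

# Example with two of the Neutron settings used above.
build_crudini_cmd answers.txt general \
    CONFIG_NEUTRON_OVS_TENANT_NETWORK_TYPE=vlan \
    CONFIG_NEUTRON_OVS_VLAN_RANGES=physnet1:1000:2000
```

Because the commands are joined with `&&`, the first failing setting stops the chain and makes the whole SSH call fail, which is what lets a retry wrapper notice the problem.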

9. Deploy our SSH key on the controller

The key will be used by Packstack to automate the SSH connection with all the nodes.

scp -i $SSH_KEY_FILE -o 'PasswordAuthentication no' $SSH_KEY_FILE $RDO_ADMIN@$CONTROLLER_VM_IP:.ssh/id_rsa

scp -i $SSH_KEY_FILE -o 'PasswordAuthentication no' $SSH_KEY_FILE_PUB $RDO_ADMIN@$CONTROLLER_VM_IP:.ssh/id_rsa.pub

10. Run Packstack!

This is obviously the most important step. Note the use of a helper function called run_ssh_cmd_with_retry to retry the command if it fails.

Transient errors in Packstack are quite common due to network connectivity issues, so they are nothing to worry about.

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "packstack --answer-file=$ANSWERS_FILE"
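For readers curious about the retry pattern itself, here's a minimal sketch. It is not the script's own run_ssh_cmd_with_retry or exec_with_retry: the name `retry_cmd`, the defaults and the argument order (command, maximum attempts, interval in seconds) are assumptions made for illustration.

```bash
#!/usr/bin/env bash

# Hypothetical retry helper: runs a command string until it succeeds or the
# maximum number of attempts is reached, sleeping between attempts.
retry_cmd() {
    local cmd="$1"
    local max_attempts="${2:-5}"
    local interval="${3:-5}"
    local attempt=1
    until eval "$cmd"; do
        if [ "$attempt" -ge "$max_attempts" ]; then
            echo "giving up after $attempt attempts: $cmd" >&2
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$interval"
    done
}
```

A call such as `retry_cmd "ssh root@192.168.200.1 'packstack --answer-file=answers.txt'" 10 30` would try up to ten times, 30 seconds apart; the answer file name here is only a placeholder. Retrying blindly is safe only for commands that can be repeated without harm, which is why the steps in this post are written to be idempotent where possible.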

11. Add additional firewall rules

Enable access from the Hyper-V host(s) and work around an existing Packstack issue in multinode environments.

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "iptables -I INPUT -s $QEMU_COMPUTE_VM_IP/32 -p tcp --dport 9696 -j ACCEPT"

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "iptables -I INPUT -s $NETWORK_VM_IP/32 -p tcp --dport 9696 -j ACCEPT"

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "iptables -I INPUT -s $NETWORK_VM_IP/32 -p tcp --dport 35357 -j ACCEPT"

if [ -n "$HYPERV_COMPUTE_VM_IP" ]; then

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "iptables -I INPUT -s $HYPERV_COMPUTE_VM_IP/32 -p tcp --dport 9696 -j ACCEPT"

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "iptables -I INPUT -s $HYPERV_COMPUTE_VM_IP/32 -p tcp --dport 9292 -j ACCEPT"

fi

12. Disable API rate limits

This is useful for PoCs and testing environments, but you most probably want to leave API rate limits in place in production.

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "crudini --set $NOVA_CONF_FILE DEFAULT api_rate_limit False"

13. Enable Neutron firewall

Fixes a Packstack glitch.

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "sed -i 's/^#\ firewall_driver/firewall_driver/g' \

/etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini && service neutron-server restart"

14. Set KVM as the libvirt type on the KVM compute node

When running on a VM, Packstack always sets the libvirt_type to QEMU, which is not what we want.

run_ssh_cmd_with_retry $RDO_ADMIN@$QEMU_COMPUTE_VM_IP "grep vmx /proc/cpuinfo > \

/dev/null && crudini --set $NOVA_CONF_FILE DEFAULT libvirt_type kvm || true"
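The check above looks for the Intel VT-x flag (vmx) only. As a hedged variation, here's a sketch that also accepts the AMD-V flag (svm); that extra flag is our addition and is not part of the script. The function name `detect_libvirt_type` is hypothetical, and the cpuinfo path is a parameter only to make the function easy to try out.

```bash
#!/usr/bin/env bash

# Prints "kvm" when the CPU flags advertise hardware virtualization
# (vmx = Intel VT-x, svm = AMD-V) and "qemu" otherwise.
detect_libvirt_type() {
    local cpuinfo="${1:-/proc/cpuinfo}"
    # -w matches whole words only, so a flag merely containing "vmx" or
    # "svm" as a substring is not mistaken for the real one
    if grep -qwE 'vmx|svm' "$cpuinfo"; then
        echo kvm
    else
        echo qemu
    fi
}
```

The result could then be passed to crudini in place of the hardcoded value, for example `crudini --set "$NOVA_CONF_FILE" DEFAULT libvirt_type "$(detect_libvirt_type)"`.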

15. Configure Open vSwitch on the Network node

run_ssh_cmd_with_retry $RDO_ADMIN@$NETWORK_VM_IP "ovs-vsctl list-ports br-ex | grep eth2 || ovs-vsctl add-port br-ex eth2"

16. Reboot all the Linux nodes to load the new 2.6.32 Kernel with network namespaces support

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP reboot

run_ssh_cmd_with_retry $RDO_ADMIN@$NETWORK_VM_IP reboot

run_ssh_cmd_with_retry $RDO_ADMIN@$QEMU_COMPUTE_VM_IP reboot

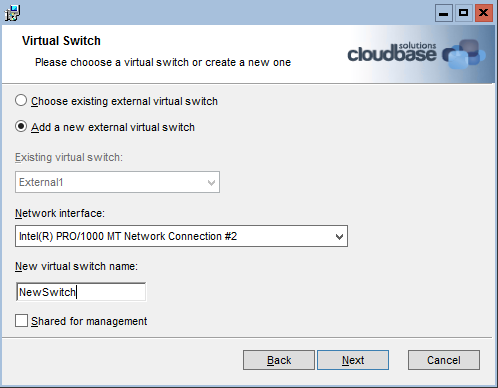

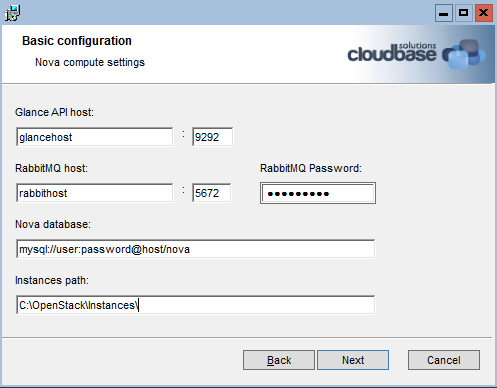

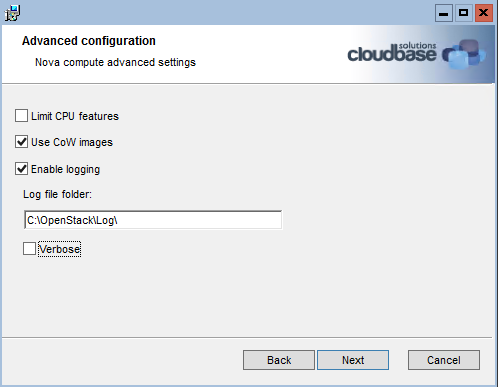

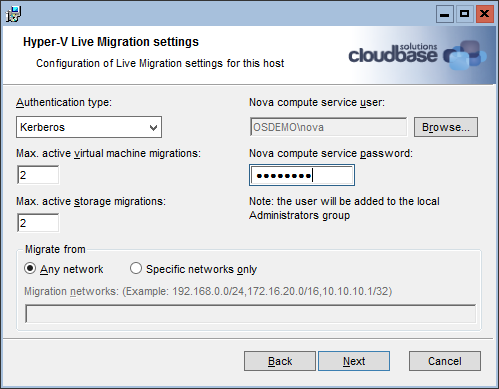

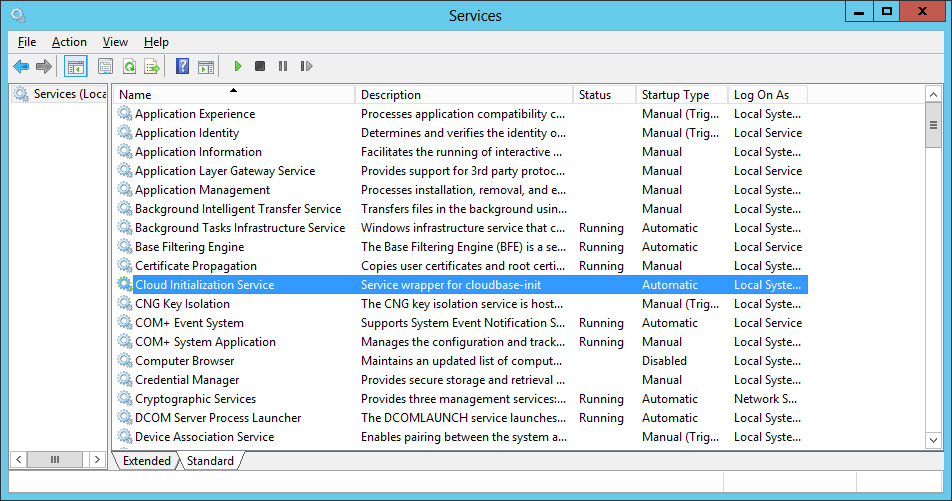

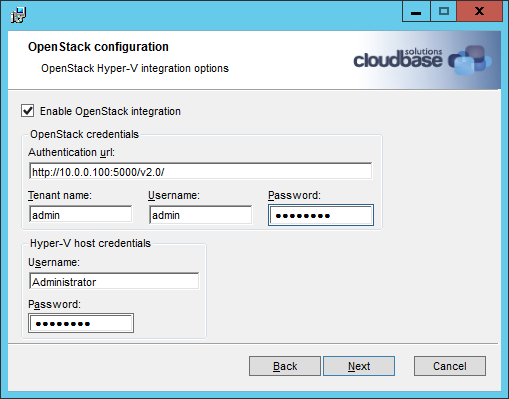

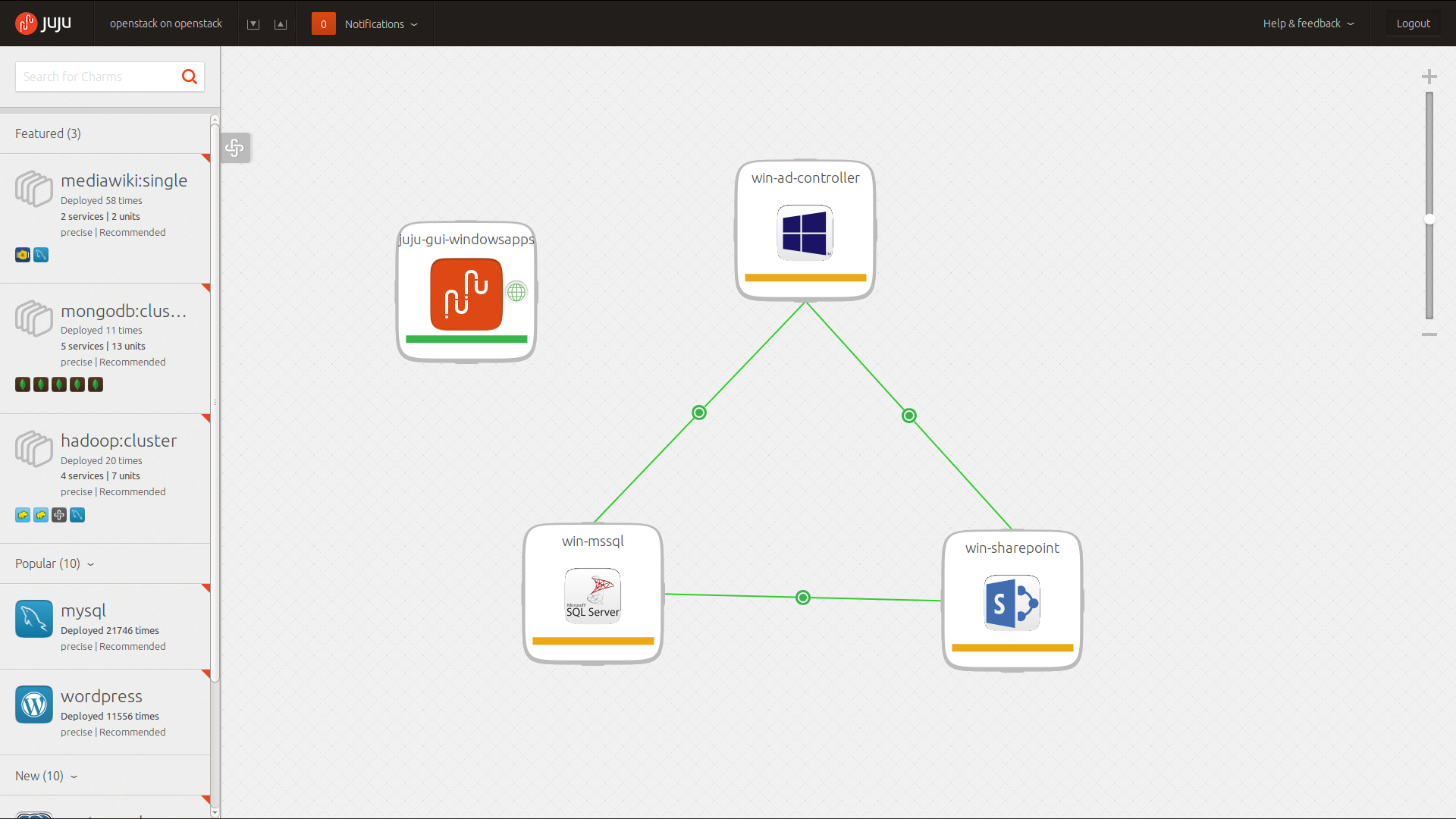

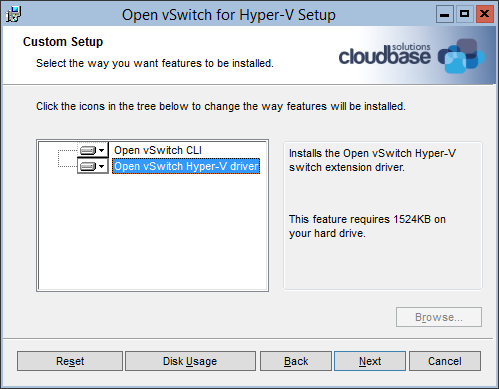

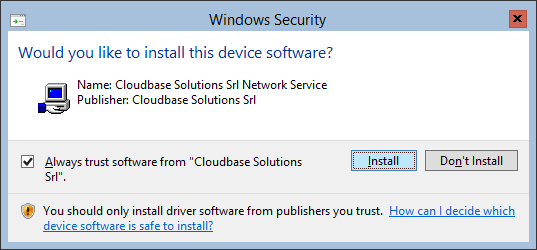

17. Install OpenStack on Hyper-V

Remotely configures a Hyper-V external virtual switch and installs the OpenStack compute node with the Nova installer.

$BASEDIR/deploy-hyperv-compute.sh $HYPERV_COMPUTE_VM_IP $HYPERV_ADMIN $HYPERV_PASSWORD $OPENSTACK_RELEASE \

$HYPERV_VSWITCH_NAME $GLANCE_HOST $RPC_BACKEND $QPID_HOST $QPID_USERNAME $QPID_PASSWORD $NEUTRON_URL \

$NEUTRON_ADMIN_AUTH_URL $NEUTRON_ADMIN_TENANT_NAME $NEUTRON_ADMIN_USERNAME $NEUTRON_ADMIN_PASSWORD \

$CEILOMETER_ADMIN_AUTH_URL $CEILOMETER_ADMIN_TENANT_NAME $CEILOMETER_ADMIN_USERNAME $CEILOMETER_ADMIN_PASSWORD \

$CEILOMETER_METERING_SECRET

Here are some details from this script.

Create a Hyper-V external virtual switch using PowerShell remotely.

$BASEDIR/wsmancmd.py -U https://$MGMT_IP:5986/wsman -u "$HYPERV_USER" -p "$HYPERV_PASSWORD" \

powershell -NonInteractive -Command '"if (!(Get-VMSwitch | where {$_.Name -eq \"'$SWITCH_NAME'\"})) \

{New-VMSwitch -Name \"'$SWITCH_NAME'\" -AllowManagementOS $false -InterfaceAlias \

(Get-NetAdapter | where {$_.IfIndex -ne ((Get-NetIPAddress -IPAddress \"'$MGMT_IP'\").InterfaceIndex)}).Name}"'

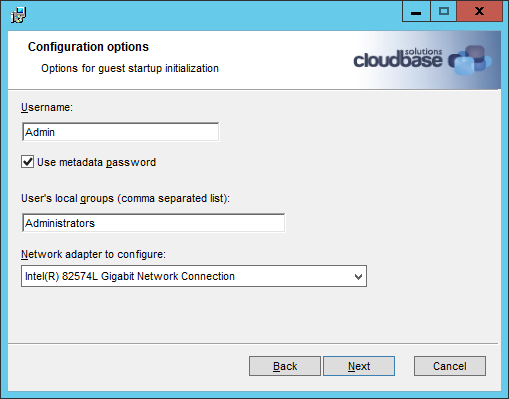

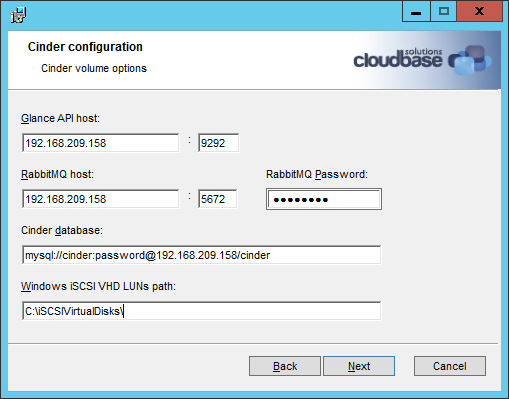

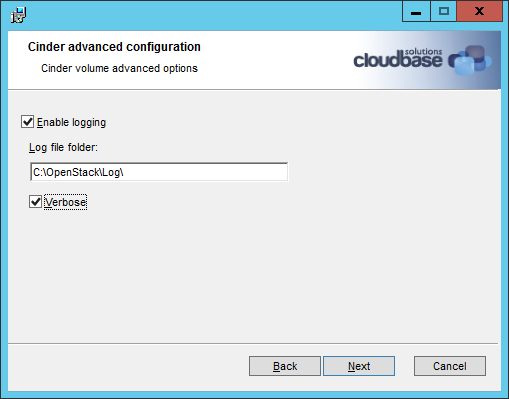

Run the Nova installer remotely in unattended mode.

run_wsmancmd_with_retry $HYPERV_COMPUTE_VM_IP $HYPERV_ADMIN $HYPERV_PASSWORD "msiexec /i %TEMP%\\$MSI_FILE /qn /l*v %TEMP%\\HyperVNovaCompute_setup_log.txt \

ADDLOCAL=$HYPERV_FEATURES GLANCEHOST=$GLANCE_HOST GLANCEPORT=$GLANCE_PORT RPCBACKEND=$RPC_BACKEND \

RPCBACKENDHOST=$RPC_BACKEND_HOST RPCBACKENDPORT=$RPC_BACKEND_PORT RPCBACKENDUSER=$RPC_BACKEND_USERNAME RPCBACKENDPASSWORD=$RPC_BACKEND_PASSWORD \

INSTANCESPATH=C:\\OpenStack\\Instances ADDVSWITCH=0 VSWITCHNAME=$HYPERV_VSWITCH USECOWIMAGES=1 LOGDIR=C:\\OpenStack\\Log ENABLELOGGING=1 \

VERBOSELOGGING=1 NEUTRONURL=$NEUTRON_URL NEUTRONADMINTENANTNAME=$NEUTRON_ADMIN_TENANT_NAME NEUTRONADMINUSERNAME=$NEUTRON_ADMIN_USERNAME \

NEUTRONADMINPASSWORD=$NEUTRON_ADMIN_PASSWORD NEUTRONADMINAUTHURL=$NEUTRON_ADMIN_AUTH_URL \

CEILOMETERADMINTENANTNAME=$CEILOMETER_ADMIN_TENANT_NAME CEILOMETERADMINUSERNAME=$CEILOMETER_ADMIN_USERNAME \

CEILOMETERADMINPASSWORD=$CEILOMETER_ADMIN_PASSWORD CEILOMETERADMINAUTHURL=$CEILOMETER_ADMIN_AUTH_URL \

CEILOMETERMETERINGSECRET=$CEILOMETER_METERING_SECRET"

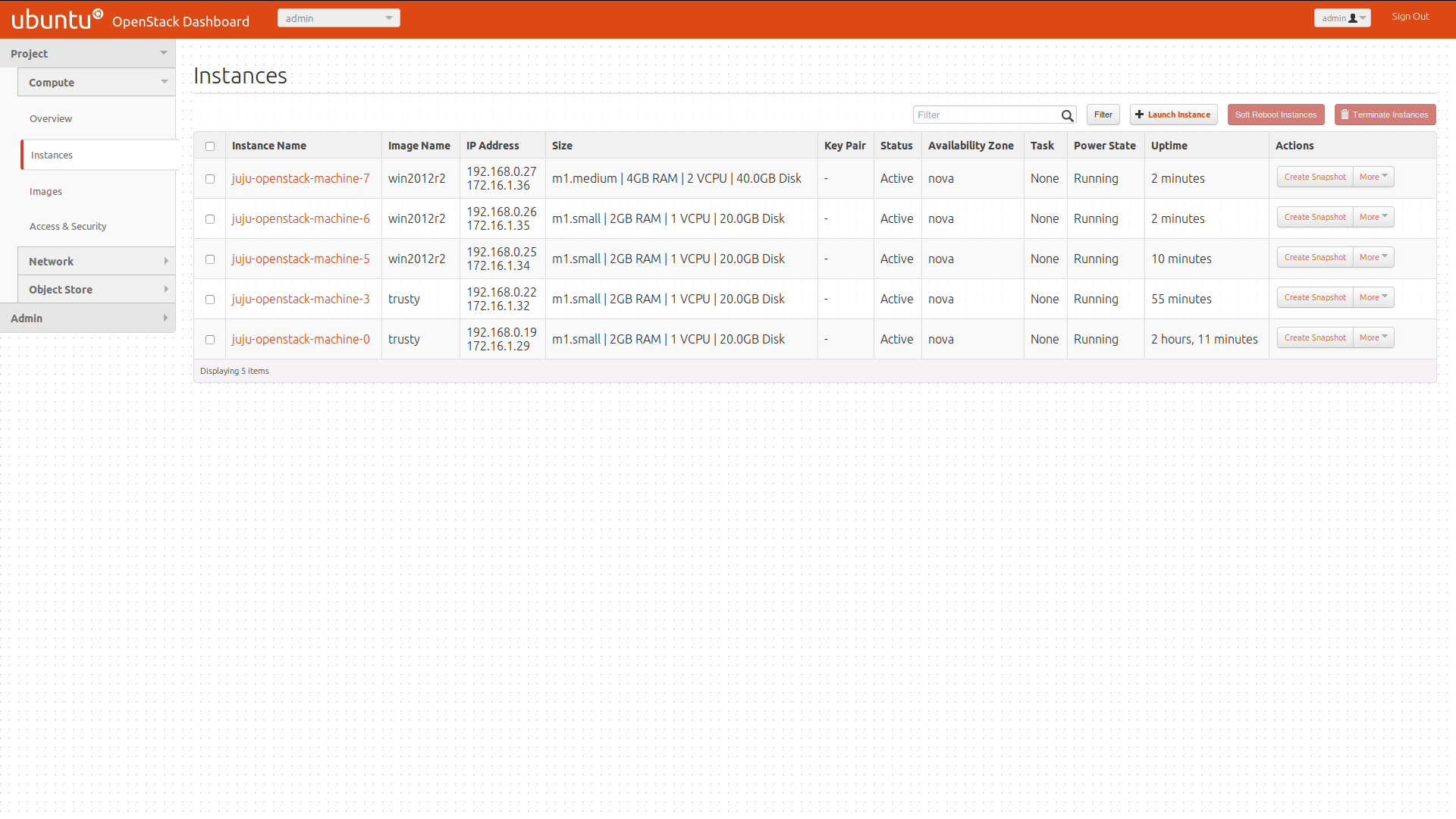

18. Make sure everything is running fine!

Check if all Nova services are running (including the optional KVM and Hyper-V compute nodes):

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "source ./keystonerc_admin && nova service-list | \
sed -e '\$d' | awk '(NR > 3) {print \$10}' | sed -rn '/down/q1'" 10
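The pipeline above is compact but a bit cryptic, so here's a more readable sketch of the same check. It assumes the Havana column order of `nova service-list` (Binary, Host, Zone, Status, State, Updated_at); the function name `all_services_up` is hypothetical.

```bash
#!/usr/bin/env bash

# Reads "nova service-list" table output on stdin and returns non-zero if
# any service reports the state "down".
# Assumed column order: Binary | Host | Zone | Status | State | Updated_at
all_services_up() {
    awk -F'|' '
        # Border lines contain no "|" and are skipped by the NF test
        NF > 5 {
            state = $6
            gsub(/ /, "", state)
            if (state == "down") { found_down = 1 }
        }
        END { exit (found_down ? 1 : 0) }
    '
}
```

On the controller it would be used as `source ./keystonerc_admin && nova service-list | all_services_up`, and the exit code can drive a retry loop while the services are still starting.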

And finally check that all the Neutron agents are up and running:

run_ssh_cmd_with_retry $RDO_ADMIN@$CONTROLLER_VM_IP "source ./keystonerc_admin && \

neutron agent-list -f csv | sed -e '1d' | sed -rn 's/\".*\",\".*\",\".*\",\"(.*)\",.*/\1/p' | sed -rn '/xxx/q1'" 10
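The same idea applies to the agent check. Here's a hedged, more readable sketch: it assumes the fourth CSV column is the "alive" flag and that dead agents are marked "xxx", which is what the sed expression above implies. The function name `all_agents_alive` is hypothetical.

```bash
#!/usr/bin/env bash

# Reads "neutron agent-list -f csv" output on stdin and returns non-zero if
# any agent is reported as dead.
# Assumed columns: id, agent_type, host, alive, admin_state_up
all_agents_alive() {
    awk -F',' '
        # Skip the CSV header, then look for the dead marker in column 4
        NR > 1 && $4 ~ /xxx/ { dead = 1 }
        END { exit (dead ? 1 : 0) }
    '
}
```

Usage on the controller would be `neutron agent-list -f csv | all_agents_alive`. The simple comma split is enough here only because none of the fields is expected to contain a comma.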

The post Multi-node OpenStack RDO on RHEL and Hyper-V – Part 2 appeared first on Cloudbase Solutions.
